It has been observed that the throughput graph often gets less attention than other analysis graphs. The reason could be that performance testers do not understand its importance, or do not care about it because throughput is not included in the NFRs. It is true that throughput does not fall under the core performance metric category, but that does not mean it can be ignored. Let’s try to understand the importance of throughput graphs in performance testing.

By the standard definition, throughput is the amount of information a system can process or transfer in a given amount of time. If you use both LoadRunner and JMeter, you will come across two different definitions. In LoadRunner, throughput is the amount of data (in bytes) sent by the server to the client per second. JMeter, on the other hand, defines throughput as the number of requests sent by the client to the server per unit of time. JMeter does, however, provide separate ‘sent bytes’ and ‘received bytes’ graphs.

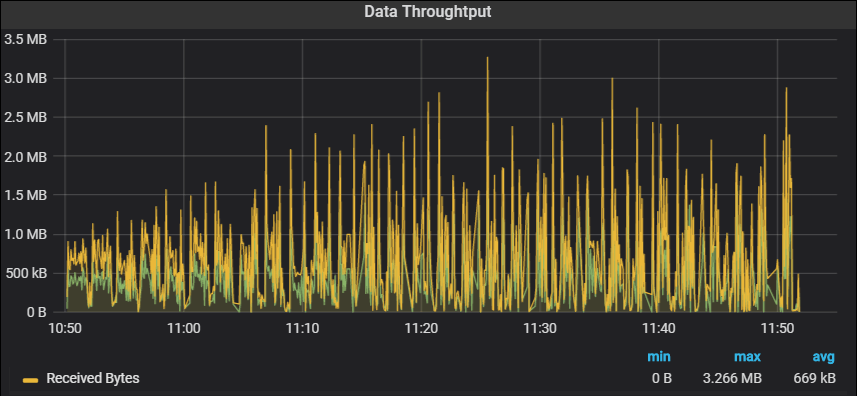
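To make the difference between the two definitions concrete, here is a minimal sketch that computes both from the same set of samples. The `Sample` record and its fields are hypothetical, not the result-file format of either tool; it only assumes that each request has a start time and the number of bytes sent and received.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical request/response record from a load test."""
    start_s: float       # request start time, seconds since test start
    bytes_received: int  # bytes the client received from the server
    bytes_sent: int      # bytes the client sent to the server


def request_throughput(samples, duration_s):
    """Requests per second (JMeter-style definition)."""
    return len(samples) / duration_s


def received_byte_throughput(samples, duration_s):
    """Bytes received from the server per second (LoadRunner-style definition)."""
    return sum(s.bytes_received for s in samples) / duration_s


def sent_byte_throughput(samples, duration_s):
    """Bytes sent by the client per second (JMeter's separate 'sent bytes' view)."""
    return sum(s.bytes_sent for s in samples) / duration_s


samples = [
    Sample(0.2, 50_000, 400),
    Sample(0.9, 52_000, 420),
    Sample(1.4, 48_000, 410),
    Sample(3.1, 51_000, 400),
]
duration = 4.0
print(request_throughput(samples, duration))        # 1.0
print(received_byte_throughput(samples, duration))  # 50250.0
print(sent_byte_throughput(samples, duration))      # 407.5
```

The same four requests give "1 request/second" under one definition and "about 50 KB/second" under the other, which is why it matters which tool's graph you are reading.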

Here, we will discuss LoadRunner’s ‘Throughput’ graph or JMeter’s ‘Received Bytes’ graph.

Purpose

- To measure the amount of data received from the server in bytes, KB or MB

- To identify network bandwidth issues

The axes of the Throughput graph represent:

- X-axis: Elapsed time. Depending on the graph settings, it is shown as relative time (from the start of the test) or actual clock time. When no filter is applied, the X-axis covers the complete duration of the test.

- Y-axis: The amount of data received by the client from the server (in bytes, KB or MB).
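The axes described above can be reproduced with a small bucketing function. This is a sketch under stated assumptions: the input is a hypothetical list of `(timestamp_s, bytes_received)` tuples, intervals are one second wide, and 1 KB is taken as 1024 bytes (some tools use 1000).

```python
import math
from collections import defaultdict

# Assumption: 1 KB = 1024 bytes; some tools use 1000 instead.
UNITS = {"bytes": 1, "KB": 1024, "MB": 1024 * 1024}


def throughput_series(events, test_start, test_end, unit="KB", relative=True):
    """Build (x, y) points for a throughput graph.

    events: iterable of (timestamp_s, bytes_received) tuples (hypothetical format).
    x is elapsed seconds since test_start when relative=True, otherwise the
    actual timestamp at the start of each one-second interval.
    y is the data received during that second, converted to `unit`.
    Every second of the test is included, so idle seconds show up as 0.
    """
    buckets = defaultdict(int)
    for ts, nbytes in events:
        if test_start <= ts < test_end:
            buckets[int(ts - test_start)] += nbytes
    divisor = UNITS[unit]
    points = []
    for sec in range(math.ceil(test_end - test_start)):
        x = sec if relative else test_start + sec
        points.append((x, buckets[sec] / divisor))
    return points


events = [(100.1, 2048), (100.7, 1024), (102.5, 4096)]
print(throughput_series(events, test_start=100, test_end=103))
# [(0, 3.0), (1, 0.0), (2, 4.0)]
```

Passing `relative=False` keeps the same Y values but labels each point with the actual timestamp, mirroring the relative/actual time setting of the X-axis.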

How to read:

The graph line shows how much data the server sends in each one-second interval. As I explained in the Purpose section, a throughput graph gives you an idea of network bandwidth issues, if any. A flat throughput graph while network latency and user load increase indicates a network bandwidth issue. On the other hand, if throughput trends downward as time progresses and the number of users (load) increases, the likely bottleneck is at the server end. In that case, merge the throughput graph with the error, user and response time graphs to identify the exact bottleneck.
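The two reading rules in the paragraph above can be expressed as a rough heuristic over aligned per-interval series. This is only a sketch: the least-squares slope as a trend measure and the 2% "flat" tolerance are arbitrary assumptions, not thresholds used by LoadRunner or JMeter, and the result is a hint for further investigation rather than a diagnosis.

```python
from statistics import mean


def slope(values):
    """Least-squares slope of values against their index (change per interval).

    Requires at least two values.
    """
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def classify(throughput, users, latency, flat_tol=0.02):
    """Heuristic reading of aligned per-interval series (same length, same intervals).

    flat_tol: slope relative to mean throughput, per interval, below which
    throughput counts as 'flat' (0.02 is an arbitrary assumption).
    Assumes mean throughput is non-zero.
    """
    t_rel = slope(throughput) / mean(throughput)
    users_rising = slope(users) > 0
    latency_rising = slope(latency) > 0
    if abs(t_rel) <= flat_tol and users_rising and latency_rising:
        return "possible network bandwidth issue"
    if t_rel < -flat_tol and users_rising:
        return "possible server-side bottleneck"
    return "no pattern detected; check the other graphs"


users = [10, 20, 30, 40, 50]
# Flat throughput while users and latency climb.
print(classify([500, 502, 499, 501, 500], users, [50, 80, 120, 170, 230]))
# Throughput falling while users climb.
print(classify([500, 450, 380, 300, 220], users, [50, 55, 60, 70, 80]))
```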

Merging the Throughput graph with other graphs:

- With User graph: Merge the User graph with the Throughput graph and study the pattern. Ideally, throughput should increase during the user ramp-up period, because more users means more data coming from the server. During the steady state of the test, throughput should stay within a range. A sudden fall in throughput during the steady state indicates a server-side issue, and the exact cause needs to be investigated using server logs.

Another scenario is when throughput flattens while the number of users keeps increasing, which may point to a bandwidth issue. To confirm it, you need to look at network latency as well.

- With Network Latency graph: To confirm a network bandwidth issue, merge the Network Latency graph with the Throughput graph. If latency increases while throughput does not, it indicates a network bandwidth issue.

- With Response Time graph: The Response Time graph can be correlated with the Throughput graph. An increase in response time with constant throughput may be due to network bandwidth issues; to confirm, refer to the Latency graph. If you see throughput degrade as response time increases, start the investigation at the server end.

- With Errors per Second graph: Merge the Throughput graph with the Errors per Second graph to identify the point at which errors start to occur and which types of errors they are.
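"Merging" graphs essentially means putting several metrics on the same time axis. The sketch below does that with plain dictionaries keyed by elapsed second (a hypothetical format, not an export format of either tool), then applies two of the checks described in the list: a sudden throughput fall during the steady state, and the first second in which errors appear. The 50% drop threshold and filling missing seconds with 0 are assumptions; identifying the error types still requires the tool's error details or server logs.

```python
def merge_series(**series):
    """Align several {elapsed_second: value} dicts on a common time axis.

    Seconds missing from a series are filled with 0 (assumption: no data means none).
    """
    seconds = sorted(set().union(*(s.keys() for s in series.values())))
    return [
        {"t": sec, **{name: s.get(sec, 0) for name, s in series.items()}}
        for sec in seconds
    ]


def sudden_drops(rows, start, end, drop_ratio=0.5):
    """Seconds in the steady-state window [start, end) where throughput falls
    below drop_ratio times the previous second's value (threshold is an assumption)."""
    hits = []
    for prev, cur in zip(rows, rows[1:]):
        if start <= cur["t"] < end and cur["throughput"] < drop_ratio * prev["throughput"]:
            hits.append(cur["t"])
    return hits


def first_error_second(rows):
    """First second with a non-zero error count, or None if there are no errors."""
    return next((r["t"] for r in rows if r["errors"] > 0), None)


throughput = {0: 100, 1: 200, 2: 300, 3: 310, 4: 305, 5: 120, 6: 300}  # KB/s
users = {0: 10, 1: 20, 2: 30, 3: 30, 4: 30, 5: 30, 6: 30}
errors = {5: 7, 6: 1}
rows = merge_series(throughput=throughput, users=users, errors=errors)

print(sudden_drops(rows, start=2, end=7))  # [5]
print(first_error_second(rows))            # 5
```

Here the throughput fall and the first errors land in the same second, which is the kind of coincidence worth chasing in the server logs.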

Remember: Before drawing any conclusion, investigate the root cause of the performance bug thoroughly by referring to all the related analysis graphs.
